Bipolar Sigmoid Activation Function

Posted By admin On 13.01.20

Understanding neural network activation functions is essential whether you use an existing software tool to perform neural network analysis of data or write custom neural network code. This article describes what neural network activation functions are, explains why they are necessary, describes three common activation functions, gives guidance on when to use a particular activation function, and presents C# implementation details of common activation functions. The best way to see where this article is headed is to look at the screenshot of the demo program in Figure 1. The demo program creates a fully connected neural network with two input, two hidden and two output nodes. After setting the inputs to 1.0 and 2.0 and setting arbitrary values for the 12 input-to-hidden and hidden-to-output weights and biases, the demo program computes and displays the two output values using three different activation functions.

Figure 1. The activation function demo.

The demo program illustrates three common neural network activation functions: logistic sigmoid, hyperbolic tangent and softmax. Using the logistic sigmoid activation function for both the input-hidden and hidden-output layers, the output values are 0.6395 and 0.6649.

The same inputs, weights and bias values yield outputs of 0.5006 and 0.5772 when the hyperbolic tangent activation function is used. And the outputs when using the softmax activation function are 0.4725 and 0.5275. This article assumes you have at least intermediate-level programming skills and a basic knowledge of the. The demo program is coded in C#, but you shouldn't have too much trouble refactoring the code to another language if you wish. To keep the main ideas clear, all normal error checking has been removed.
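The demo itself is written in C#. Below is a minimal Python sketch of the same kind of forward pass; it is not the article's code. It makes the following assumptions:

* The weights and biases are hypothetical, because the text does not list the demo's actual values. The printed outputs will therefore not match the figures quoted above.
* For the softmax run, the sketch uses tanh in the hidden layer and applies softmax only to the output sums.
* The helper names (`weighted_sums`, `per_node`, `forward`) are illustrative.

```python
import math


def sigmoid(x):
    """Logistic sigmoid: maps any real x into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def softmax(sums):
    """Softmax over a whole layer: outputs are positive and sum to 1."""
    m = max(sums)  # subtracting the max keeps math.exp from overflowing
    exps = [math.exp(s - m) for s in sums]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sums(inputs, weights, biases):
    """Pre-activation sums; weights[i][j] connects previous-layer node i to node j."""
    return [sum(inputs[i] * weights[i][j] for i in range(len(inputs))) + biases[j]
            for j in range(len(biases))]


def per_node(f):
    """Wrap a scalar activation so it is applied to each node's sum independently."""
    return lambda sums: [f(s) for s in sums]


def forward(inputs, ih_w, h_b, ho_w, o_b, hidden_act, output_act):
    """Fully connected 2-2-2 forward pass.

    hidden_act is a scalar function applied per hidden node; output_act receives
    the whole list of output sums, because softmax needs all of them at once.
    """
    hidden = [hidden_act(s) for s in weighted_sums(inputs, ih_w, h_b)]
    return output_act(weighted_sums(hidden, ho_w, o_b))


# Hypothetical weights and biases (4 + 2 + 4 + 2 = 12 values). The demo's actual
# values are not listed in the text, so these do not reproduce its outputs.
IH_W = [[0.01, 0.02], [0.03, 0.04]]
H_B = [0.05, 0.06]
HO_W = [[0.07, 0.08], [0.09, 0.10]]
O_B = [0.11, 0.12]

x = [1.0, 2.0]
print("sigmoid:", forward(x, IH_W, H_B, HO_W, O_B, sigmoid, per_node(sigmoid)))
print("tanh:   ", forward(x, IH_W, H_B, HO_W, O_B, math.tanh, per_node(math.tanh)))
# Assumption: tanh in the hidden layer, softmax only on the output layer.
print("softmax:", forward(x, IH_W, H_B, HO_W, O_B, math.tanh, softmax))
```

The output activation takes the whole list of sums rather than one value at a time. Sigmoid and tanh act on each node independently, but softmax normalizes across all output nodes. That is why its two outputs always sum to 1, as the demo's softmax values do.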

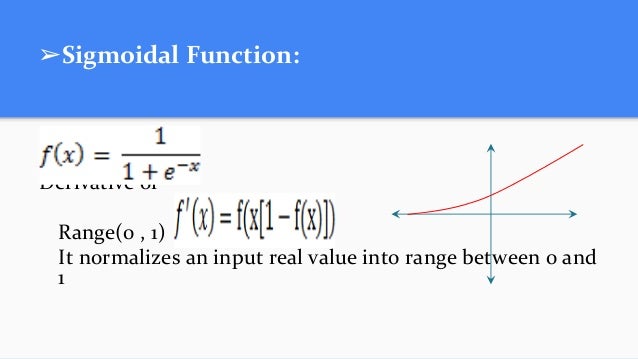

Published: Mon 13 November 2017

An activation function is used to introduce non-linearity into a network. This allows us to model a class label or score that varies non-linearly with the independent variables. Non-linear means the output cannot be reproduced as a linear combination of the inputs. This lets the model learn complex mappings from the available data, and thus the network becomes a universal function approximator. In contrast, a model that uses a linear function (i.e., no activation function) cannot make sense of complicated data such as speech or video. However many layers it has, it is only as expressive as a single layer, because a composition of linear functions is itself linear. Another important aspect of the activation function is that it should be differentiable.

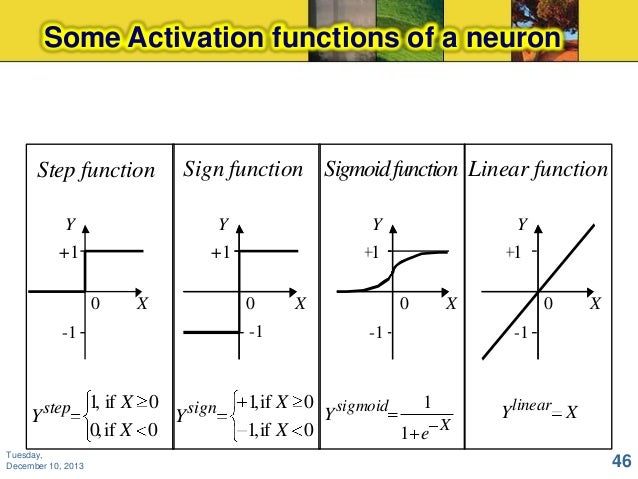

This is required when we backpropagate through the network to compute gradients and tune the weights accordingly. Common non-linear functions such as the sigmoid and $\tanh$ are continuous and squash their input into a bounded range such as $(0, 1)$ or $(-1, 1)$. Inputs are normally normalized, but they move beyond their original scale once they are multiplied by their respective weights. In a neural network, some neurons can have linear activation functions, but they must be accompanied by neurons with non-linear activation functions elsewhere in the same network. Although any non-linear function can be used as an activation function, in practice only a small fraction of them are used. Listed below are some commonly used activation functions, along with a Python snippet to create their plot:

Binary step.

Tanh.

$$a_{ij} = f(x_{ij}) = \tanh(x_{ij})$$

The $\tanh$ non-linearity compresses the input into the range $(-1, 1)$.

$\tanh$ provides a zero-centered output: large negative inputs are mapped to negative outputs, and near-zero inputs are mapped to near-zero outputs. Its gradients are also steeper than those of the sigmoid, but it still suffers from the vanishing gradient problem.
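The Python sketch below makes the tanh-versus-sigmoid comparison concrete. It assumes the standard closed-form derivatives $\sigma'(x) = \sigma(x)(1 - \sigma(x))$ and $\tanh'(x) = 1 - \tanh^2(x)$. It also checks numerically the well-known identity $\tanh(x) = 2\sigma(2x) - 1$. The helper names are illustrative.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    # d/dx sigma(x) = sigma(x) * (1 - sigma(x)); its maximum is 0.25, at x = 0.
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x):
    # d/dx tanh(x) = 1 - tanh(x)^2; its maximum is 1.0, at x = 0.
    t = math.tanh(x)
    return 1.0 - t * t


# Zero-centered vs. not: tanh(0) = 0, while sigmoid(0) = 0.5.
print("tanh(0) =", math.tanh(0.0), " sigmoid(0) =", sigmoid(0.0))

# Both gradients shrink toward 0 as |x| grows (saturation -> vanishing gradients).
for x in [0.0, 2.0, 5.0, 10.0]:
    print(f"x={x:5.1f}  sigmoid'={sigmoid_grad(x):.2e}  tanh'={tanh_grad(x):.2e}")

# Numerical check of the identity tanh(x) = 2 * sigma(2x) - 1.
for x in [-3.0, -0.5, 0.0, 1.0, 4.0]:
    assert math.isclose(math.tanh(x), 2.0 * sigmoid(2.0 * x) - 1.0, abs_tol=1e-12)
```

Near $x = 0$, the gradient of $\tanh$ (1.0) is four times that of the sigmoid (0.25). For large $|x|$, however, both derivatives approach zero. This saturation is the source of the vanishing gradient problem in deep stacks of these units.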

$\tanh$ is commonly described as a scaled and shifted version of the sigmoid; the identity $\tanh(x) = 2\sigma(2x) - 1$ holds for all $x$. An alternative equation for the $\tanh$ activation function is.

Rectified linear unit (ReLU).

$$a_{ij} = f(x_{ij}) = \max(0, x_{ij})$$

A rectified linear unit outputs $0$ if its input is less than or equal to $0$; otherwise, its output is equal to its input. It is also more. This has been widely used in. It is also superior to the sigmoid and $\tanh$ activation functions in that it does not saturate for positive inputs, so it largely avoids the vanishing gradient problem.

Thus, it allows for faster and more effective training of deep neural architectures. However, ReLU is non-differentiable at $0$, and its gradient is zero for every negative input. As a result, a ReLU neuron can become inactive for all inputs, i.e., it dies out. This can be caused by high learning rates, and it reduces the model's learning capacity.

This is commonly referred to as the “dying ReLU” problem.
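The dying-ReLU effect can be illustrated with a single neuron, as in the minimal Python sketch below. It makes the following assumptions:

* The weights, the bias and the input batch are hypothetical.
* Using a gradient of 0 at exactly $z = 0$ is a common convention chosen here, not something the text specifies.
* The function names are illustrative.

```python
def relu(x):
    """ReLU: 0 for x <= 0, otherwise x itself."""
    return max(0.0, x)


def relu_grad(x):
    # ReLU is not differentiable at 0; using 0 there is a common convention (assumption).
    return 1.0 if x > 0 else 0.0


def neuron_forward_backward(inputs, weights, bias):
    """One ReLU neuron: returns its output and d(output)/d(weight) for each weight."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    g = relu_grad(z)
    return relu(z), [g * x for x in inputs]


# Hypothetical scenario: an oversized update (e.g. from a high learning rate) has
# pushed the bias far negative, so the pre-activation z is negative for every input.
batch = [[0.5, 1.0], [1.0, 2.0], [2.0, 0.5]]
weights, bias = [0.3, -0.2], -10.0

for inputs in batch:
    out, grads = neuron_forward_backward(inputs, weights, bias)
    print(out, grads)  # prints 0.0 [0.0, 0.0] for every input: the neuron is "dead"
```

The neuron outputs 0 for every input in the batch. Every weight gradient is also 0, so gradient descent never changes its weights, and the neuron stays inactive.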